As data becomes one of, if not the most relevant input for companies to generate real business value, Data Engineers have had to rise to the occasion. Their challenge: building increasingly complex data pipelines.

These data pipelines stand at the core of any data product:

These pipelines might process batch data (discrete and usually large amounts of data over a given period, like a day, a week, or a month) or streaming data (continuous amounts of data with no clear discretization or separation ).

Data pipelines are complex enough, but the devil is in the details. The previously mentioned steps must be executed in a specifc order and have dependencies between each other: how to make sure that you have ingested from all your sources before transforming and storing them? What happens if our pipeline fails to ingest one of those sources?

For example, let’s say we want to count the number of new users we’ve got for a given date. We’d build a data pipeline that ingests customer data for the given date from two different databases, transforms this input by counting the number of users, and then stores it in a separate table. It’s easy to see that if we didn’t make sure these tasks were executed sequentially then our output wouldn’t be correct! The same goes for failures: if we fail to ingest from any of its sources then we wouldn’t really be counting all of our users.

And this is a simple scenario: what if our pipeline has even more tasks and dependencies?

Thankfully, there’s a way to avoid all of these headaches: Data Engineers use workflow management tools to help them deal with all of those nasty scenarios.

Not so long ago, in a galaxy not so far away, data pipelines were executed using something like cron jobs (a [simple] job scheduler on Unix-like operating systems) However, data pipelines are becoming more complex with more dependencies and steps. What was once a simple query that selected data from two tables, joined them, and inserted the resulting data into a third table is now a complex workflow of steps with several data pipelines across different data sources and systems.

Since CRON is just a job scheduler that triggers a script or command at a specific time it proved insufficient to support this scenario. You can detect that cron and other job scheduling systems are not enough to manage modern data workflows when you need to develop custom code to handle:

The answer came in the form of automated orchestration tools. In 2014, looking to address challenges in their own workflow management, Airbnb developed Apache Airflow: a tool that would make executing, troubleshooting, monitoring and adapting workflows much easier.

Airflow specializes in executing and coordinating tasks that involve discretely manipulating batches of data. If you’re looking for a tool that can handle streams of data, Apache Airflow is not the tool for you, but they have some recommendations about that!

Let’s go back to the distant past of 2014 for a minute. Our boss asked us to run an ETL job each day at midnight, so as to feed that data into daily reports and visualizations.

You created a trusty cron job in a forgotten instance, and that solution kept your boss happy for a few days. But one morning your boss taps your shoulder (remember this is 2014, so we are all working in open space offices instead of our homes!) and angrily tells you that the reports didn’t get yesterday’s data, right when your boss was supposed to show them to their supervisor.

You check the cron job in your instance but you find nothing, leaving you to scratch your head in confusion. Later in the day you find out that one of the APIs you use to extract data had a small amount of downtime at night, causing your whole pipeline to fail.

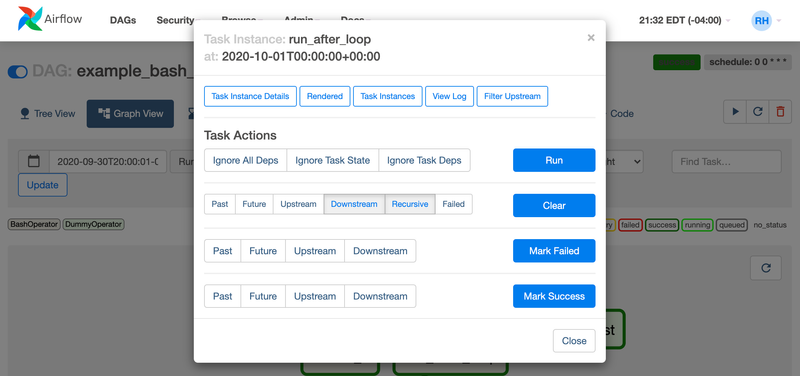

That’s just one of the hidden complexities of creating an orchestration platform: How do I know how and why it failed? How do I define complex dependencies between my tasks? What if I want to retry a few minutes after failure? Is it possible to run multiple of these tasks in parallel? Does it usually fail at the same time/day? How long does it take to run?

It’s hard to ask those features out of a simple cron job!

But Airflow does all of this and more. All of these features come out of the box with Airflow:

You’ll only need to understand the following components, making Airflow extremely simple to pick up:

The entry barrier for using Airflow is low as its main language is Python: the most widely used programming language for data teams.

Working with many clients translates to working with many different requirements and development environments: some use it on the Cloud, others run it on their own private servers. We find being able to easily set up and run Airflow is key to allowing us to deliver value and results efficiently.

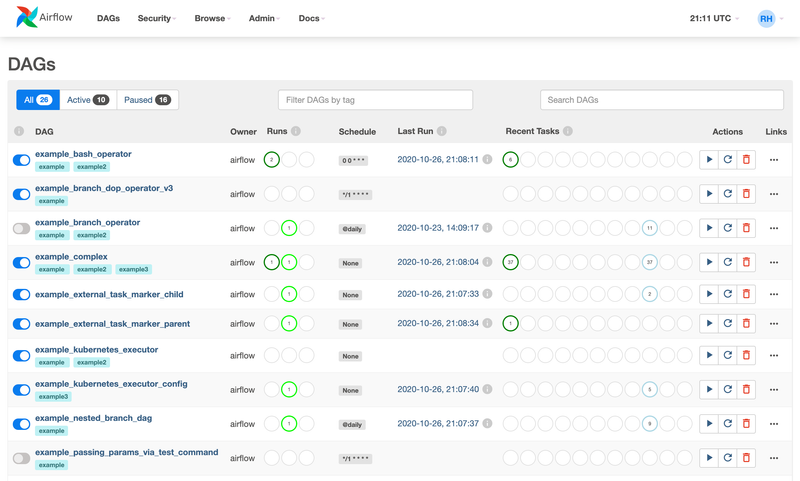

This benefit isn´t limited to programmers. Anyone with access to Airflow´s UI can re-run jobs, check logs and troubleshoot for issues to discover if anything has gone wrong. Its friendly UI and modern web application grants access to a variety of metrics including: performance, execution times, processes status and execution tries. This makes monitoring workflows accessible. Many functions including starting, pausing and ending a workflow can be carried out directly from the application.

Airflow facilitates the execution of tasks in commonly used providers such as Google Cloud, Amazon AWS, and Microsoft Azure. Additionally, instead of manually programming connections you can use the previously defined “Airflow Operators” allowing integration with other systems like databases, mailing alerts, and frameworks.

Airflow allows users to scale horizontally as more tasks or processes are added to the workflow. This way you’ll never be caught by surprise by a sudden compute intensive workload.

But that’s not the only reason why Airflow is scalable. Since Airflow defines all of its workflows as code, DAGs can be dynamically generated. Consuming an outside resource like a database or configuration file will do the trick. Adding a new DAG for your latest product now requires no coding involved, allowing anyone to schedule powerful ETL jobs.

Being able to define workflows as Python code means Airflow enables building higher-level abstractions in a framework-like manner. You can create functions that generate a typical combination of tasks that is frequently repeated to avoid code repetition and simplify changes. Also, you could have a builder object that creates DAGs (Airflow workflows) dynamically from configuration files. This could be achieved by any procedural language but Python being a dynamic language and the defacto standard for data apps is the ideal option. These characteristics and Airflow’s popularity makes it an ideal candidate to use as a baseline to construct a framework to create a DataOps style platform.

You can read more about our experience building DataOps platforms here.

Based on Python and an Open Source community, Airflow is easily extensible and customizable with a robust ecosystem of integrations and extensions.

If you´ve heard about Airflow before this post, you may have also heard about Astronomer. If not, you can read more about them on our latest press release where we announced our technological partnership.

Astronomer delivers orchestration for the modern data platform, and acts as a steward of the Apache Airflow project. Presently at the center of the Airflow community and ecosystem, Astronomer, is the driving force behind releases and the Airflow Roadmap.

Astronomer also offers self-hosted and SaaS managed Airflow with commercial support, building out a full data orchestration platform.

When compared to simply using Airflow, Astronomer stands out by allowing seamless deployment, infinite scalability, greater system visibility, reduced day 2 operation times and, beyond break/fix support from experts.

As part of their goal of growing the Airflow community, Astronomer offers a myriad of useful resources including: talks, podcasts, community forums, slack channels, videos, documents and customer support. They also offer Apache Airflow certifications with training and exams that cover variables, pools, DAG, versioning, templatings, taskflow apis, sub dags, SLAs and many more concepts in order to stand out in the community as an Airflow Expert.

By now, you have probably gathered we like using Airflow and believe the platform delivers many benefits to its users. If you´re interested in learning more, we have taken the liberty of recommending some useful links. Hope you enjoy them!

After getting your feet wet, we recommend taking one of Astronomer’s certifications!

We have successfully built data and machine learning pipelines for adtech platforms, ad real time bidding systems, marketing campaign optimization, Computer Vision, NLP and many more projects using Airflow. Not only do we recommend the tool but we continue to build on our expertise and incentivize our team members to get Astronomer certified.

Need help figuring out the best way to boost your business with data? We are excited to help, you can find out more about us here.

We hope you’ve found this post useful, and at least mildly entertaining. If you like what you’ve read so far, got some mad dev skills, a Data Engineering itch to build modern data pipelines, and like applying machine learning to solve tough business challenges, send us your resume here or visit our lever account for current team openings!

Airflow Machine Learning Data Pipelines Astronomer Big Data Data Engineering