Check out the first part of this post where we explore what technical debt is, whether it’s always a bad thing, and strategies to manage it.

Now, let’s dive back in! Technical debt takes various forms, and businesses may struggle with multiple types simultaneously. Let’s explore the first four types of technical debt here; we’ll cover the last two in the upcoming final post of the series.

Maintenance debt is generated when data, software, systems, and code are left unattended over time, leading to a backlog of updates, improvements, feature releases, or a buildup of “dead” code.

This debt may accumulate due to a shift in priorities or neglect of data upkeep following the departure of responsible individuals or teams.

It can be seen in unexpected failures, inflexibility in integration, security risks, and performance degradation. These issues arise due to downstream systems working with outdated data, software, legacy systems, or lack of updates.

The primary negative impact is timing. Applying fixes on a planned schedule or as part of a preventive strategy is not the same as having to stop what you’re doing, and miss deadlines, to apply unexpected fixes.
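To make the idea of a planned schedule a bit more concrete, here is a minimal sketch of a staleness check that flags components nobody has touched in a while. The component names, dates, and the 180-day threshold are all hypothetical; a real inventory would come from your own package manifests or asset catalog.

```python
from datetime import date


def stale_components(last_updated, today, max_age_days=180):
    """Return the names of components not updated within max_age_days.

    last_updated maps a component name to the date of its last update.
    The 180-day default is an illustrative assumption, not a recommendation.
    """
    return sorted(
        name
        for name, updated in last_updated.items()
        if (today - updated).days > max_age_days
    )


# Hypothetical inventory, for illustration only.
inventory = {
    "etl-job": date(2022, 1, 10),
    "dashboard": date(2023, 5, 1),
    "auth-lib": date(2021, 11, 3),
}

overdue = stale_components(inventory, today=date(2023, 6, 1))
print(overdue)
```

Running a check like this on a schedule turns maintenance into a visible backlog item instead of a surprise.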

Decision debt takes place when short-sighted decisions made to achieve short-term goals lead to long-term issues in systems, tools, infrastructure, architecture, or the ability to scale. Essentially, these are choices made in favor of short-term gains.

Decision debt can be seen as outdated tech stacks that may be difficult to maintain or scale in the future, selection of limited-feature cloud providers or vendors, product launches without proper documentation, implementations of new solutions with insufficient testing, or selection of cheaper systems at the expense of security and authentication to deliver to market faster.

Blockbuster and BlackBerry are examples of technical decision debt. Blockbuster neglected to invest in a digital platform, and BlackBerry couldn’t keep up with innovations in its market. Both chose short-term gains over long-term maintainability, stability, and scalability.

This type of debt arises when the accumulation of different technical debts destabilizes a business’s technological infrastructure. When the underlying infrastructure becomes outdated, unsupported, or unstable, the entire system becomes more prone to bugs, crashes, and other vulnerabilities.

This often becomes visible when scaling: as companies grow and evolve, their systems become more complex and harder to maintain, which leads to issues.

Developer efficiency debt is directly related to engineering workflows and best practices. It refers to shortcuts in development best practices, including contributing, testing, monitoring, and alerting, that may lead to problems with deployment and build times and processes down the road.

Developer efficiency debt can creep up on you in many different forms. Signs of this type of technical debt may include deployment times that stretch from the expected minutes to hours or even days, or subpar detection practices that allow issues to slip through the cracks and pop up unexpectedly, sometimes even post-release. For example, encountering bugs after new features have been released is a clear indication of this type of debt.
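One simple way to spot the deployment-time symptom is to compare each deployment against a time budget. The sketch below is only an illustration: the 15-minute budget and the release names are hypothetical, and in practice the durations would come from your CI/CD tool.

```python
from datetime import timedelta

# Hypothetical budget: how long a deployment is expected to take.
EXPECTED_DEPLOY_TIME = timedelta(minutes=15)


def slow_deployments(deployments, budget=EXPECTED_DEPLOY_TIME):
    """Return the names of deployments that took longer than the budget.

    deployments is a list of (name, duration) pairs.
    """
    return [name for name, duration in deployments if duration > budget]


# Hypothetical deployment history, for illustration only.
history = [
    ("release-1", timedelta(minutes=9)),
    ("release-2", timedelta(hours=3)),
    ("release-3", timedelta(days=1, hours=2)),
]

flagged = slow_deployments(history)
print(flagged)
```

Tracking this list over time shows whether deployments are drifting from minutes toward hours or days.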

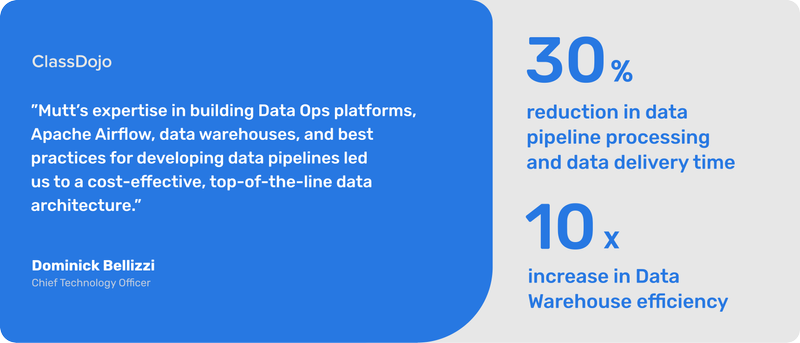

Let’s dive deeper with a real-life example of a company dealing with multiple types of technical debt simultaneously and some actions taken by Mutt Data to tackle said debt. Here’s a snapshot of our recent work in the EdTech Industry.

Startups prioritize market fit and time to market over careful analysis of architecture, tools, or practice fundamentals, leading to technical debt. This project was no exception, as the focus was on getting features to production quickly with a rollback option if needed.

To solve this debt, we applied a thorough Discovery process, investigating the client’s industry, business, and strategy to provide advice on technical capabilities, allowing us to uncover deeper root issues and debt. We then classified issues and debt, prioritized, and designed an action plan. Our findings? Data availability was decreasing, pipelines were cost-ineffective, and developers were acting as a bottleneck for analytics. There were various types of tech debt acting together.

TLDR: The company was prioritizing the short term over long-term scalability. The choice of tools was based on familiarity and comfort, not long-run maintainability.

Development had become inefficient. There was no clear ownership roadmap in place, too many dependencies between employees were creating friction, and the lack of a single self-serve source of truth was a clear issue.

TLDR: A lack of data governance, quality, anomaly detection, monitoring, and testing tools and best practices was causing delays in development and processing times, which impacted the data analytics operation. Data quality could not be trusted, and issues were slipping through the cracks.
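To give a feel for what basic data quality and anomaly detection checks can look like, here is a minimal sketch using only the Python standard library. It is not the tooling used in this project; the column names, sample rows, daily counts, and the z-score threshold of 3 are all hypothetical.

```python
from statistics import mean, stdev


def null_rate(rows, column):
    """Fraction of rows where the column is missing or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) is None)
    return missing / len(rows)


def is_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it sits far from the historical mean.

    Uses a simple z-score; the threshold of 3 is an illustrative assumption.
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


# Hypothetical sample data, for illustration only.
rows = [
    {"student_id": 1, "score": 90},
    {"student_id": 2, "score": None},
    {"student_id": 3, "score": 75},
    {"student_id": 4},
]
daily_counts = [1000, 1020, 980, 1010, 990]

print(null_rate(rows, "score"))
print(is_volume_anomaly(daily_counts, today=400))
```

Checks like these are cheap to run on every pipeline execution, so problems surface before they reach dashboards or downstream consumers.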

To solve this debt we did the following:

Many of the changes required to fix developer debt were being blocked by a lack of updates in current tools or systems. This showcases the negative effect that overlooking updates and timely fixes can have on your ability to change, iterate, and ship in an agile way. To fix it we:

The company had grown, making systems more complex and harder to maintain. An outdated infrastructure, the lack of a data lake or similar centralized structure, and unsupported systems were generating issues and long-running queries.

Our most important takeaway was the value of implementing data quality practices and getting developers to be aware of, and care about, data quality. Beyond that, data governance tools such as DataHub could bring even bigger advantages when combined with data quality and observability features.

Needless to say, this is a shortened case study, kept brief for the sake of this post. You can check out the in-depth case study from ClassDojo’s point of view, from our point of view, or from Amazon Web Services’ point of view.